Much has recently been written about the poor state of scientific literacy in the U.S. and the media’s role in that downward slide. At the same time, ‘industrial’ or ‘conventional’ agriculture, and the real, live farmers who contribute to it, continue to be damned by activists, non-governmental organizations, and enlightened people on social media. Similarly, academic objectivity has been hijacked by academic activism to lend an air of credibility to those who wish to portray the present farm and food system as in shambles. A recent journal article making the rounds exemplifies these concepts and highlights many of the problems with both public and academic activism as they relate to and affect farmers and consumers.

The article, published in JAMA Internal Medicine, “Association Between Pesticide Residue Intake From Consumption of Fruits and Vegetables and Pregnancy Outcomes Among Women Undergoing Infertility Treatment With Assisted Reproductive Technology” (Chiu et al., 2017), has been shared on social media and discussed in popular science venues quite a bit since its publication in late October 2017. (I won’t dwell on the shares it’s had among activist and issue-oriented websites like thenatpath.com, davidwolfe.com, mercola.com, organicconsumers.org, etc.; their response to this confirmation of their biases is to be expected.)

- Science Daily reports, “Eating more fruits and vegetables with high-pesticide residue was associated with a lower probability of pregnancy and live birth following infertility treatment for women using assisted reproductive technologies.”

- Huffington Post offers the headline, “Trying to get pregnant? Science suggests: eat organic and regulate the pesticide industry.” (Article written by Stacy Malkan, Co-director of the activist group U.S. Right to Know)

- Reuters: “Women who eat more fruits and vegetables with high levels of pesticide residue may be less likely to get pregnant than women whose diets don’t include a lot of this type of produce, a U.S. study suggests.”

- Modern Farmer says, “High-Pesticide Fruits and Vegetables Correlated with Lower Fertility Rates, Says Study.”

- WebMD leads its story with, “Couples who are trying to have children should probably be picky about their produce, a new study suggests…”

- Hell, even Nature got in on the story, “…Reproductive endocrinology: Exposure to pesticide residues linked to adverse pregnancy outcomes…”

The headlines and statements pulled from these articles all make it sound like we have a crisis on our hands, that our food supply has been shown by science to be unsafe, and pesticides are to blame. But let’s look at this paper a bit closer, with a skeptical and statistical focus.

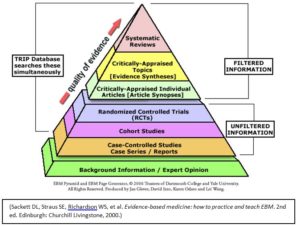

This was an observational cohort study. That means that the independent variable of concern, in this case pesticide residue intake, is not under the control of the researchers. That doesn’t invalidate the study, but it immediately makes it a weaker one. A group of 325 women receiving assisted reproduction treatments (in vitro fertilization, IVF) were surveyed about their diet over the three months before assessment, as well as about other potential factors. Their fruit and vegetable (FV) intake was tallied and compared against USDA pesticide residue surveillance data, using protocols similar to those behind the Environmental Working Group’s ‘Dirty Dozen’ list. That annual report, which labels many conventionally grown produce items as dangerously contaminated with pesticide residue, has been heavily criticized as opaque about its methodology, flawed in its calculation of risk, and sensationalized in how it hypes its results (Fenner-Crisp, Keen, Richardson, Richardson, & Rozman, 2010; Winter & Katz, 2011). In the present study, a cumulative risk measure, the “Pesticide Residue Burden Score” (PBRS), is calculated and assigned to each subject. While the PBRS is cited as a technique validated in prior studies, all of those citations come from the same lab group, which indicates that this categorically assigned value has seen little use elsewhere in the authors’ field and has not been replicated by an independent lab.
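
To make that kind of construction concrete, here is a hedged sketch of how a composite residue score of this general type can be built. It is not the authors’ formula: the three surveillance metrics, the rank-sum scoring, the `n_high` cutoff, and every item and number below are hypothetical assumptions. The sketch ranks produce items on the surveillance metrics, labels the top-ranked items ‘high residue’, and then sums one person’s self-reported servings of those items.

```python
from dataclasses import dataclass


@dataclass
class Produce:
    name: str
    pct_detectable: float      # % of samples with any detectable residue (hypothetical)
    pct_over_tolerance: float  # % of samples above a tolerance level (hypothetical)
    pct_three_plus: float      # % of samples with 3+ different residues (hypothetical)


def ranks(values):
    """1-based ranks, lowest value = 1 (ties broken by input order)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, i in enumerate(order, start=1):
        result[i] = rank
    return result


def classify(items, n_high):
    """Sum each item's ranks across the three metrics; label the top n_high items 'high'."""
    metric_ranks = [
        ranks([p.pct_detectable for p in items]),
        ranks([p.pct_over_tolerance for p in items]),
        ranks([p.pct_three_plus for p in items]),
    ]
    totals = [sum(r) for r in zip(*metric_ranks)]
    by_total = sorted(range(len(items)), key=lambda i: totals[i], reverse=True)
    high = set(by_total[:n_high])
    return {p.name: ("high" if i in high else "low") for i, p in enumerate(items)}


def high_residue_servings(diet, labels):
    """Sum self-reported daily servings of items labeled 'high'."""
    return sum(servings for name, servings in diet.items() if labels.get(name) == "high")


# Entirely hypothetical items and numbers, for illustration only.
items = [
    Produce("item_a", 90.0, 2.0, 60.0),
    Produce("item_b", 40.0, 0.5, 10.0),
    Produce("item_c", 75.0, 1.0, 35.0),
    Produce("item_d", 20.0, 0.0, 5.0),
]
labels = classify(items, n_high=2)
diet = {"item_a": 0.5, "item_b": 2.0, "item_c": 1.0, "item_d": 0.25}
print(labels)
print("high-residue servings/day:", high_residue_servings(diet, labels))
```

Note what never enters the calculation: any measurement taken from the subject. Her ‘exposure’ is self-reported servings multiplied by a label derived from someone else’s produce samples.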

Quibbling about statistical design aside, let me break it down. The primary independent treatment variable, pesticide exposure (PBRS), is derived from: a) a retrospective questionnaire (how many of us could accurately say what we ate over the last three months?); b) a dataset of pesticide residues from a very small sample of fruits and vegetables grown all over the world, with no link between those growing conditions and the actual pesticide load of the individual subjects, and no account of the variability of residues within samples; and c) arbitrary criteria the authors used to translate all of this into what is essentially a “big” and a “little” treatment. The number of leaps in this chain of assumptions is mind-boggling, and assumptions built on assumptions of assumptions are a pretty poor way to assign treatment variables. The researchers could have run a simple quantitative test to confirm their assignment of exposure risk: measuring pesticide metabolites in urine. That one step would have made for a stronger study, assuming it didn’t completely invalidate the PBRS treatments they selected, which is entirely possible.
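
A quick simulation shows why unverified exposure estimates matter. This is a sketch under loudly stated assumptions: true exposure is drawn from a standard normal distribution, the combined questionnaire-plus-surveillance error is additive Gaussian noise of a chosen size (`noise_sd`, hypothetical), and subjects are split into quartiles. Only the sample size of 325 comes from the study. The function reports how often a subject’s estimated quartile matches her true one.

```python
import random


def quartile_labels(values):
    """Assign quartile 1-4 by rank (ties broken by input order)."""
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    labels = [0] * n
    for rank, i in enumerate(order):
        labels[i] = rank * 4 // n + 1
    return labels


def quartile_agreement(n=325, noise_sd=1.0, seed=0):
    """Fraction of subjects whose estimated-exposure quartile matches their true quartile."""
    rng = random.Random(seed)
    true_exposure = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # Hypothetical error model: estimate = truth + recall/residue-data noise.
    estimated = [x + rng.gauss(0.0, noise_sd) for x in true_exposure]
    true_q = quartile_labels(true_exposure)
    est_q = quartile_labels(estimated)
    return sum(t == e for t, e in zip(true_q, est_q)) / n


for sd in (0.0, 0.5, 1.0, 2.0):
    print(f"noise_sd={sd}: quartile agreement = {quartile_agreement(noise_sd=sd):.2f}")
```

With no noise, agreement is 1.0 by construction; as `noise_sd` grows, more subjects land in the wrong quartile, which generally blurs or distorts any real exposure-outcome relationship. A direct biomarker such as urinary metabolites is what would reveal how large that error actually is.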

The dependent variables were discrete, more easily measured data points: things like the number of embryos transferred, the number implanted, clinical pregnancies, and live births.

Then a long list of ‘confounders’, or other factors affecting the dependent variables, is discussed, including age, body mass index, race (but only ‘white’ race, yes or no), education, smoking history, organic food intake (self-reported, and also placed on an arbitrary categorical scale), and intake of things like alcohol, caffeine, and vitamins. So it seems that they screened out the other things that are well known to affect pregnancy outcomes and are able to look only at the effects of PBRS, right? Sort of. Looking at Table 2, we see that for high-residue FV intake, the highest quartile (i.e., the arbitrarily determined highest pesticide exposure group) had statistically significantly greater organic food intake than the lowest quartile (quartile 1). Yet the paper explains in its discussion that eating organic food may decrease pesticide exposure, which is the complete opposite of the statistics presented in the paper. Similarly, we find statistically significant differences in confounders between groups all over the place, including various vitamin intake levels, infertility levels (a HUGE problem; the study should have ended right there), and smoking rates.
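
The problem with confounders that differ across exposure groups can be illustrated with a hedged simulation. Every number is a hypothetical assumption: a binary confounder (think of it as a more severe infertility diagnosis) makes ‘high’ exposure more likely and lowers the chance of a live birth, while exposure itself has no effect on the outcome at all. The crude comparison still shows exposure ‘associated’ with fewer births. Comparing within each level of the confounder (stratification, used here as a simple stand-in for model adjustment, not as the authors’ method) makes the difference essentially vanish.

```python
import random


def simulate(n=100_000, seed=1):
    """Hypothetical cohort: confounder c drives both exposure and outcome; exposure has no effect."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        c = rng.random() < 0.5                        # confounder present in about half of subjects
        high = rng.random() < (0.7 if c else 0.3)     # confounder raises the chance of 'high' exposure
        birth = rng.random() < (0.25 if c else 0.45)  # outcome depends ONLY on the confounder
        rows.append((c, high, birth))
    return rows


def birth_rate(rows, high, c=None):
    """Share of live births among subjects with the given exposure (and optional confounder level)."""
    outcomes = [b for ci, h, b in rows if h == high and (c is None or ci == c)]
    return sum(outcomes) / len(outcomes)


rows = simulate()
crude_diff = birth_rate(rows, True) - birth_rate(rows, False)
stratum_diffs = {c: birth_rate(rows, True, c) - birth_rate(rows, False, c) for c in (False, True)}
print(f"crude difference (high - low): {crude_diff:+.3f}")
for c, d in stratum_diffs.items():
    print(f"within confounder={c}: {d:+.3f}")
```

Under these made-up parameters the expected crude live-birth rates are about 0.31 (high) versus 0.39 (low), while the within-stratum differences sit near zero. Adjustment of this kind only rescues an analysis if the confounders are measured well and the model is specified correctly, which is exactly why readers need to see the model.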

The authors ran a series of correlational analyses, which by design only point to associations between data points and cannot test for causation. Consider the popular site Spurious Correlations, which reports links between the number of films Nicolas Cage appeared in and the number of people who drowned in a pool, based on actual data and the correlations between those variables. It highlights that correlation indeed does not mean causation; that the experimental variables here were so vaguely defined and arbitrarily selected further suggests there is little to no meaning to be drawn from the weak statistics used in the article. The authors mention adjustments made to “the model” to account for the confounders, but the model itself isn’t laid out in the article for readers to evaluate. Sorry, that’s poor form for such a groundbreaking research paper, and it indicates not only a lack of transparency but also a lack of scientific rigor in the article’s entire premise.
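
The Spurious Correlations point has a simple arithmetic cousin: the more associations you test, the more likely some come up ‘significant’ by chance alone. A hedged sketch, assuming independent tests, every null hypothesis true, and the conventional 0.05 threshold; the test counts are hypothetical and are not a tally of the paper’s analyses. Bonferroni is shown only as the simplest textbook correction, not as what the authors did or should have done.

```python
def family_wise_error_rate(n_tests: int, alpha: float = 0.05) -> float:
    """P(at least one false positive) across n independent tests when every null is true."""
    return 1.0 - (1.0 - alpha) ** n_tests


def bonferroni_alpha(n_tests: int, alpha: float = 0.05) -> float:
    """Per-test threshold that keeps the family-wise error rate at or below alpha."""
    return alpha / n_tests


for k in (1, 5, 10, 20):
    print(f"{k:>2} tests: P(>=1 false positive) = {family_wise_error_rate(k):.3f}, "
          f"Bonferroni per-test alpha = {bonferroni_alpha(k):.4f}")
```

Under these assumptions, 20 independent tests carry roughly a 64% chance of at least one false positive.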

However, let’s assume that the model they developed to compare the arbitrary PBRS levels to pregnancy outcomes was valid. The outcomes analyzed were reported as computed confidence intervals (CIs) for the various pregnancy outcomes (implantation, clinical pregnancy, live birth). CIs are a commonly used way of gauging how precise your estimate is, based on your sample mean and the variance among samples. Conducting a statistical test on computed CIs derived from assumptions of assumed assumptions (that’s a loss of four degrees of freedom for the stats folks) makes this analysis a complete shot in the dark as far as statistical validity goes.
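
As a reminder of what a CI encodes, here is a sketch using the simple normal-approximation (Wald) interval for a proportion. This is an assumption for illustration: whatever model-based method the authors used is not reproduced here, and the birth and cycle counts are hypothetical. The same 40% success rate yields a much wider interval when it rests on fewer observations.

```python
import math


def wald_ci(successes: int, n: int, z: float = 1.96):
    """Normal-approximation (Wald) 95% CI for a proportion, clipped to [0, 1]."""
    p = successes / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return max(0.0, p - half_width), min(1.0, p + half_width)


# Hypothetical counts: the same 40% live-birth rate at three different sample sizes.
for births, cycles in ((20, 50), (54, 135), (216, 540)):
    lo, hi = wald_ci(births, cycles)
    print(f"{births}/{cycles}: p = {births / cycles:.2f}, 95% CI = ({lo:.2f}, {hi:.2f})")
```

Split a few hundred women into quartiles and the per-group counts shrink accordingly, so the intervals widen; differences between groups then have to be read with that width in mind.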

Given all of the problems with this study, it’s amazing that it was ever even published. The authors did give themselves an out, though. In the ‘Limitations’ section, they state:

“Our study has some limitations. First, exposure to pesticides was not directly assessed but was rather estimated from self-reported FV intake paired with pesticide residue surveillance data. Although we have adjusted for organic FV intake, data on whether individual FVs were consumed as organic or conventional were not collected, possibly leading to exposure misclassification…Second, our methodology does not allow linking specific pesticides to adverse reproductive effects. Further confirmation studies, preferably accounting for common chemical mixtures used in agriculture by biomarkers, are needed. Third, as in all observational studies, we cannot rule out the possibility that residual (e.g., significant differences in organic FV consumption across quartiles of high–pesticide residue FV intake) or unmeasured confounding may still be explaining some of our observed associations…”

How about this explanation: a group of scientists began their study on pregnancy outcomes with a high-risk population as far as their dependent variables were concerned (women undergoing fertility treatment); made up arbitrary high- and low-risk groups based on assumed data with no verification of actual exposure; ‘accounted’ for confounding variables that were significantly different across the treatment groups; left the model out of the paper (“just trust us”); and overstated conclusions based on wide confidence intervals (calculated guesses at the actual ranges in populations) for the dependent outcomes.

This is, at best, a ‘hypothesis study’: something that sets further research down a path to determine what might be going on in a set of data that shows a certain trend. More realistically, it’s an activist-science fishing expedition fed to a receptive media, which gobbled it up and spit it out. The saddest part of the story is that reputable media outlets, with stretched staffs and few reporters who have a scientific background and the time to apply it, are simply parroting the press releases accompanying the paper.

And we, again, see the public discourse dumbed down, and we see the finger pointed at conventional farmers, with organic farmers offered an undeserved (going strictly on the evidence presented) halo. No one is saying that there shouldn’t be careful scrutiny applied to pesticides, or to any facet of agriculture that entails risk to both farmers and consumers. But this study, the conclusions it presents based on fairly weak evidence, and the media promotion around it aren’t adding much of substance to the conversation.

Chiu, Y., Williams, P. L., Gillman, M. W., et al. (2017). Association between pesticide residue intake from consumption of fruits and vegetables and pregnancy outcomes among women undergoing infertility treatment with assisted reproductive technology. JAMA Internal Medicine. doi:10.1001/jamainternmed.2017.5038

Fenner-Crisp, P., Keen, C., Richardson, J., Richardson, R., & Rozman, K. (2010). A Review of the Science on the Potential Health Effects of Pesticide Residues on Food and Related Statements Made by Interest Groups. Retrieved from http://safefruitsandveggies.com/sites/default/files/expert-panel-report.pdf

Winter, C. K., & Katz, J. M. (2011). Dietary exposure to pesticide residues from commodities alleged to contain the highest contamination levels. Journal of Toxicology, 2011.